Once you have set up your Python environment, you can install Chainer.

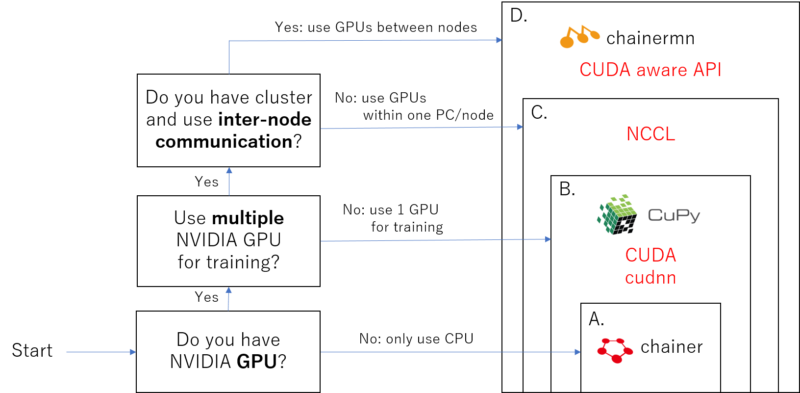

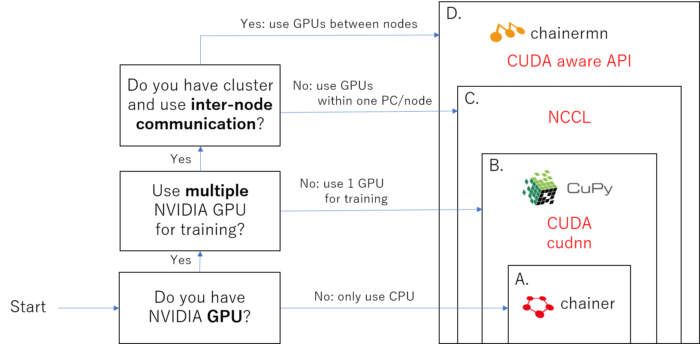

Nowadays it is important for a deep learning library to use the GPU to speed up computation, and Chainer provides several levels of GPU support.

The packages you need to install depend on your PC environment. Please use the figure above to find which category you are in.

Note that each category also includes the dependencies of the categories before it. For example, if you are in category B, you need to install chainer (from A) and CuPy, CUDA, and cuDNN (from B).

I think most users fall into category A or B; C and D are only for professionals.
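To make the cumulative rule concrete, here is a small sketch. The per-category package lists are my own summary of the sections in this article (not an official manifest), and the names `CATEGORY_PACKAGES` and `packages_for` are hypothetical.

```python
# Packages introduced by each category, summarized from the sections of this article.
# A higher category also needs everything from the categories before it.
CATEGORY_PACKAGES = {
    "A": ["chainer"],
    "B": ["CUDA", "cuDNN", "cupy"],
    "C": ["NCCL"],
    "D": ["CUDA-aware MPI", "chainermn"],
}
ORDER = ["A", "B", "C", "D"]


def packages_for(category):
    """Return every package needed for `category`, accumulating lower categories first."""
    if category not in CATEGORY_PACKAGES:
        raise ValueError("unknown category: %r" % category)
    needed = []
    for cat in ORDER[: ORDER.index(category) + 1]:
        needed.extend(CATEGORY_PACKAGES[cat])
    return needed


print(packages_for("B"))  # ['chainer', 'CUDA', 'cuDNN', 'cupy']
```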


A: CPU user

If you don’t have an NVIDIA GPU, or you don’t need GPU support, you only need to install Chainer.

Using a GPU (categories B, C, and D) basically only changes the calculation speed, so you can still try all of Chainer's functionality on the CPU.

※ It is fine to try Chainer with only a CPU. But when you want to seriously run deep learning code on large data (such as images), you may find that it runs too slowly on the CPU alone.

Install chainer

pip install chainer

Just one line, that’s all :).

Update chainer

Chainer development is very active, and new versions are released frequently (see Release Cycle for details).

To check the current version, type the following on the command line:

python -c "import chainer; print(chainer.__version__)"

To install the latest version of Chainer:

pip install -U chainer
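If you want to check versions from Python without importing the packages themselves (handy when an import would fail), the standard library's `importlib.metadata` (Python 3.8+) can read the installed distribution's version. This is a minimal sketch; `installed_version` is a hypothetical helper name.

```python
from importlib import metadata  # standard library, Python 3.8+


def installed_version(package):
    """Return the installed version string of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


for name in ("chainer", "cupy"):
    print(name, installed_version(name) or "not installed")
```

If you installed a prebuilt CuPy wheel published under a CUDA-specific distribution name, query that name instead of `cupy`.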

B: 1 GPU user

If you have an NVIDIA GPU, you can speed up computation by installing CuPy, which is essentially a GPU version of NumPy. GPUs are good at parallel computing, and once you set up CUDA, cuDNN, and CuPy, training can sometimes be more than 10 times faster than on the CPU alone, depending on the task (for example, CNNs used in image processing benefit a lot!).

Before installing CuPy, you need to install and set up the CUDA and cuDNN libraries.

Install CUDA

According to the official CUDA website:

CUDA® is a parallel computing platform and programming model invented by NVIDIA. It enables dramatic increases in computing performance by harnessing the power of the graphics processing unit (GPU).

Please follow the official download page to get the CUDA library and install it.

Install cudnn

The NVIDIA CUDA® Deep Neural Network library (cuDNN) is a GPU-accelerated library of primitives for deep neural networks.

Even without cuDNN, Chainer can use the GPU with CUDA alone. However, if you install cuDNN, computation is more highly optimized; in particular, GPU memory usage can drop dramatically, so I recommend installing cuDNN as well.

Please go to the cuDNN download page to get the cuDNN library and install it. You may need to register as a member to download it.

Install cupy

pip install cupy

Basically that’s all. See install cupy for details.
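After installing CuPy, a quick way to confirm that Chainer actually picked up CUDA and cuDNN is to read the flags in Chainer's CUDA backend module. This is a sketch assuming Chainer v4 or later (`chainer.backends.cuda`), with a fallback to the older `chainer.cuda` module; `gpu_status` is a hypothetical helper name.

```python
def gpu_status():
    """Report whether Chainer imports and whether it can use CUDA (via CuPy) and cuDNN."""
    status = {"chainer": False, "cuda": False, "cudnn": False}
    try:
        import chainer  # noqa: F401
    except ImportError:
        return status
    status["chainer"] = True
    try:
        from chainer.backends import cuda  # Chainer v4 and later
    except ImportError:
        from chainer import cuda  # older Chainer releases
    # `available` is True when CuPy imported successfully inside Chainer.
    status["cuda"] = bool(cuda.available)
    # `cudnn_enabled` is True when CuPy's cuDNN bindings loaded (and CHAINER_CUDNN is not 0).
    status["cudnn"] = bool(cuda.cudnn_enabled)
    return status


print(gpu_status())
```

If `cuda` is False even though you have a GPU, CuPy is either missing or failed to load, which usually means it needs to be reinstalled against your CUDA installation.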

If you installed CuPy before installing CUDA and now want to use CUDA, please reinstall CuPy. You also need to reinstall CuPy whenever you upgrade CUDA.
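To tell whether CuPy still matches your CUDA installation after an upgrade, you can ask CuPy which CUDA runtime version it reports via `cupy.cuda.runtime.runtimeGetVersion()`. CUDA encodes versions as `1000 * major + 10 * minor` (so 8000 is CUDA 8.0). This is a sketch; the helper names are hypothetical, and it simply returns None when CuPy is missing or cannot talk to CUDA.

```python
def decode_cuda_version(v):
    """Split CUDA's integer version encoding (1000 * major + 10 * minor) into (major, minor)."""
    return v // 1000, (v % 1000) // 10


def cupy_cuda_version():
    """Return the (major, minor) CUDA runtime version CuPy reports, or None if unavailable."""
    try:
        import cupy
        return decode_cuda_version(cupy.cuda.runtime.runtimeGetVersion())
    except Exception:  # ImportError, or a CUDA error on machines without a usable driver
        return None


print(decode_cuda_version(8000))   # (8, 0)
print(decode_cuda_version(10010))  # (10, 1)
print(cupy_cuda_version())
```

If the reported version differs from the CUDA you just installed, that is likely a sign CuPy needs to be reinstalled.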

Reinstall chainer, cupy

When you reinstall a package, it is better to explicitly tell pip not to use cached files.

pip uninstall chainer
pip uninstall cupy
pip install chainer --no-cache-dir -vvvv
pip install cupy --no-cache-dir -vvvv

C: Multiple GPUs within 1 PC

If you have more than one GPU and want to use them to train a single model, you can use the MultiprocessParallelUpdater class in Chainer (chainer.training.updaters.MultiprocessParallelUpdater).

To use this updater, you need to install the NCCL library.

※ If you are not going to train a single model on multiple GPUs, you can skip installing NCCL even if you have multiple GPUs. For example, without any NCCL setup you can run a training process for “model A” on GPU 0 and another training process for “model B” on GPU 1 at the same time.
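For the independent-jobs case in the note above, one common approach (my suggestion, not something Chainer requires) is to start each training script with its own `CUDA_VISIBLE_DEVICES`, so each process sees exactly one GPU. The script names below are hypothetical placeholders, and the helper names are hypothetical too.

```python
import os
import subprocess
import sys


def job_env(gpu_id):
    """Copy the current environment but expose only one GPU to the child process."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_id)  # inside the child, this GPU appears as device 0
    return env


def launch_independent_jobs(scripts_by_gpu):
    """Start one training script per GPU; the jobs never communicate, so NCCL is not involved."""
    procs = []
    for gpu_id, script in scripts_by_gpu.items():
        procs.append(subprocess.Popen([sys.executable, script], env=job_env(gpu_id)))
    return procs


# Hypothetical scripts: model A on GPU 0 and model B on GPU 1 at the same time.
# procs = launch_independent_jobs({0: "train_model_a.py", 1: "train_model_b.py"})
# for p in procs:
#     p.wait()
```

Because each child only sees one GPU, each training script should select device 0.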

Install NCCL

NCCL (pronounced “Nickel”) is a stand-alone library of standard collective communication routines, such as all-gather, reduce, broadcast, etc., that have been optimized to achieve high bandwidth over PCIe.

Please follow the official GitHub page to install NCCL.

Below is what I did, but the steps may change in the future.

cd your-workspace-path
git clone https://github.com/NVIDIA/nccl
cd nccl
# use your own CUDA_HOME path
make CUDA_HOME=/usr/local/cuda-8.0 test

After installing NCCL, please set up the environment paths as well.

export NCCL_ROOT=<path to NCCL directory>
export CPATH=$NCCL_ROOT/include:$CPATH
export LD_LIBRARY_PATH=$NCCL_ROOT/lib/:$LD_LIBRARY_PATH
export LIBRARY_PATH=$NCCL_ROOT/lib/:$LIBRARY_PATH

Then reinstall Chainer and CuPy. If successful, you can use MultiprocessParallelUpdater for multi-GPU model training.
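Here is a rough sketch of both steps: checking whether the reinstalled CuPy picked up NCCL (by trying to import `cupy.cuda.nccl`), and wiring up MultiprocessParallelUpdater with one iterator per GPU over a random shard of the data. The helper names are hypothetical, the per-GPU batch size of 32 is an arbitrary assumption, and `build_updater` needs Chainer, CuPy, NCCL, and multiple GPUs to actually run.

```python
def nccl_available():
    """True if the installed CuPy appears to have NCCL support."""
    try:
        from cupy.cuda import nccl
    except Exception:  # CuPy missing, or built without NCCL
        return False
    # Some CuPy releases may expose an `available` flag instead of failing the import.
    return bool(getattr(nccl, "available", True))


def make_devices(gpu_ids):
    """Devices mapping for MultiprocessParallelUpdater: the first GPU is the 'main' device."""
    devices = {"main": gpu_ids[0]}
    for i, gpu_id in enumerate(gpu_ids[1:], start=1):
        devices["gpu%d" % i] = gpu_id  # key names other than 'main' are arbitrary
    return devices


def build_updater(train_dataset, optimizer, gpu_ids, batchsize=32):
    """Sketch: one MultiprocessIterator per GPU, each over a disjoint random shard."""
    import chainer
    from chainer.training import updaters
    shards = chainer.datasets.split_dataset_n_random(train_dataset, len(gpu_ids))
    iterators = [chainer.iterators.MultiprocessIterator(s, batchsize) for s in shards]
    return updaters.MultiprocessParallelUpdater(
        iterators, optimizer, devices=make_devices(gpu_ids))


print("NCCL available:", nccl_available())
```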

D: Cluster with multiple GPUs

This is specialized, professional usage for those setting up GPU clusters.

You can use ChainerMN (the chainermn package) for multi-node, distributed deep learning training.

ChainerMN is reported to scale almost linearly even with 128 GPUs, completing ImageNet training in 4.4 hours.

Install CUDA-aware MPI

Please follow the official ChainerMN documentation.

Install chainermn

pip install chainermn
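To give a feel for what changes in a training script, here is a sketch of the per-process setup in the style of ChainerMN's examples: create a communicator, pick the GPU from the process's local rank, and wrap the optimizer so gradients are averaged across processes. The script name and helper names are hypothetical, "hierarchical" is one of the communicator types ChainerMN offers, and it assumes an MPI `mpiexec` launcher that starts one process per GPU.

```python
def mpiexec_command(n_processes, script):
    """Command line that starts one MPI process per GPU (assumes `mpiexec` is on PATH)."""
    return ["mpiexec", "-n", str(n_processes), "python", script]


def setup_multi_node(model, optimizer):
    """Per-process setup sketch; each MPI process drives one GPU."""
    import chainermn
    from chainer.backends import cuda
    comm = chainermn.create_communicator("hierarchical")
    device = comm.intra_rank  # local rank on this node selects the GPU
    cuda.get_device_from_id(device).use()
    model.to_gpu()
    # Wrap the optimizer so gradients are all-reduced across all processes.
    optimizer = chainermn.create_multi_node_optimizer(optimizer, comm)
    optimizer.setup(model)
    return comm, optimizer


# Hypothetical script name; 4 processes for 4 GPUs.
print(" ".join(mpiexec_command(4, "train_mnist.py")))  # mpiexec -n 4 python train_mnist.py
```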

For details, please refer to the official ChainerMN documentation.