Long Short-Term Memory

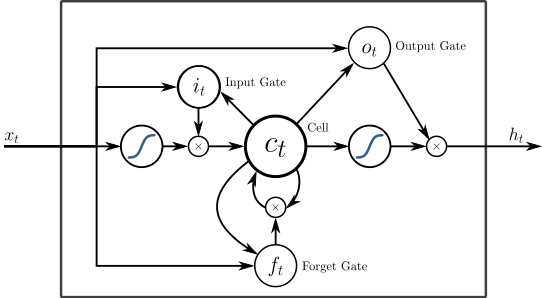

Long short-term memory (LSTM) is an advanced version of the RNN. It has a "cell" state c that keeps long-term information.
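To make the role of the cell c concrete, here is a minimal NumPy sketch of one step of a textbook LSTM cell. It is not Chainer's implementation. The weight names W, U and b, the helper names, and the gate order inside the stacked pre-activation are assumptions for illustration. The forget gate f decides how much of the previous cell state survives, and the input gate i decides how much new content is written.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One step of a textbook LSTM cell (illustrative sketch, not Chainer's code).

    x:      (batch, n_in)          input at this time step
    h_prev: (batch, n_units)       previous hidden state
    c_prev: (batch, n_units)       previous cell state
    W:      (n_in, 4 * n_units)    input weights (hypothetical layout)
    U:      (n_units, 4 * n_units) recurrent weights (hypothetical layout)
    b:      (4 * n_units,)         bias
    """
    z = x @ W + h_prev @ U + b
    # Assumed gate order in the stacked pre-activation: candidate, input, forget, output
    a, i, f, o = np.split(z, 4, axis=1)
    a = np.tanh(a)   # candidate content to write into the cell
    i = sigmoid(i)   # input gate: how much of a to write
    f = sigmoid(f)   # forget gate: how much of c_prev to keep
    o = sigmoid(o)   # output gate: how much of the cell to expose as h
    c = f * c_prev + i * a
    h = o * np.tanh(c)
    return h, c


# Usage: with the forget gate saturated open and the input gate closed,
# the cell state is carried over unchanged to the next step.
n_in, n_units, batch = 3, 2, 1
rng = np.random.default_rng(0)
x = rng.standard_normal((batch, n_in))
h_prev = np.zeros((batch, n_units))
c_prev = np.array([[0.5, -1.5]])
W = np.zeros((n_in, 4 * n_units))
U = np.zeros((n_units, 4 * n_units))
b = np.concatenate([np.zeros(n_units), -100 * np.ones(n_units),
                    100 * np.ones(n_units), np.zeros(n_units)])
h, c = lstm_step(x, h_prev, c_prev, W, U, b)
```

The additive update c = f * c_prev + i * a is what lets information survive many steps: as long as f stays close to 1, the old cell content is copied forward instead of being squashed through a nonlinearity at every step.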

LSTM Network Implementation with Chainer

Chainer provides both an LSTM function (F.lstm) and an LSTM link (L.LSTM), so we can use them directly to construct a neural network with LSTM.

A sample implementation follows (adapted from the official Chainer example code):

```python
import numpy as np
import chainer
import chainer.functions as F
import chainer.links as L


# Copied from chainer examples code
class RNNForLM(chainer.Chain):
    """Definition of a recurrent net for language modeling"""

    def __init__(self, n_vocab, n_units):
        super(RNNForLM, self).__init__()
        with self.init_scope():
            self.embed = L.EmbedID(n_vocab, n_units)
            self.l1 = L.LSTM(n_units, n_units)
            self.l2 = L.LSTM(n_units, n_units)
            self.l3 = L.Linear(n_units, n_vocab)

        # Initialize every learnable parameter uniformly in [-0.1, 0.1]
        for param in self.params():
            param.data[...] = np.random.uniform(-0.1, 0.1, param.data.shape)

    def reset_state(self):
        # Clear the hidden and cell states kept by the stateful LSTM links
        self.l1.reset_state()
        self.l2.reset_state()

    def __call__(self, x):
        h0 = self.embed(x)
        # F.dropout is only active while chainer.config.train is True
        h1 = self.l1(F.dropout(h0))
        h2 = self.l2(F.dropout(h1))
        y = self.l3(F.dropout(h2))
        return y
```
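Note that L.LSTM is stateful: each call reads the hidden state h and cell state c stored by the previous call, which is why RNNForLM exposes reset_state(). The NumPy sketch below mimics that pattern with a hypothetical StatefulLSTM class; its name, weight initialization and gate layout are assumptions, not Chainer's code. Feeding the same input twice gives different outputs because the state carries over, and reset_state() restores the initial behavior.

```python
import numpy as np


def _sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))


class StatefulLSTM:
    """Hypothetical NumPy stand-in for a stateful LSTM layer (illustration only)."""

    def __init__(self, n_in, n_units, seed=0):
        rng = np.random.default_rng(seed)
        # Uniform init in [-0.1, 0.1], similar in spirit to RNNForLM
        self.W = rng.uniform(-0.1, 0.1, (n_in, 4 * n_units))
        self.U = rng.uniform(-0.1, 0.1, (n_units, 4 * n_units))
        self.b = np.zeros(4 * n_units)
        self.n_units = n_units
        self.reset_state()

    def reset_state(self):
        # Forget everything remembered from previous calls
        self.h = None
        self.c = None

    def __call__(self, x):
        if self.h is None:
            # First call after a reset: start from zero hidden and cell states
            self.h = np.zeros((x.shape[0], self.n_units))
            self.c = np.zeros((x.shape[0], self.n_units))
        z = x @ self.W + self.h @ self.U + self.b
        a, i, f, o = np.split(z, 4, axis=1)
        self.c = _sigmoid(f) * self.c + _sigmoid(i) * np.tanh(a)
        self.h = _sigmoid(o) * np.tanh(self.c)
        return self.h


layer = StatefulLSTM(n_in=3, n_units=4)
x = np.ones((1, 3))
y1 = layer(x)        # state starts from zeros
y2 = layer(x)        # same input, but the state left by the previous call is used
layer.reset_state()
y3 = layer(x)        # after the reset, behaves like the first call again
```

In a language model this is why reset_state() is typically called before feeding a new, unrelated sequence: otherwise the first prediction would be conditioned on whatever text was processed last.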

Update: [Note]

self.params() returns all the learnable parameters in this Chain (for example, W and b of a Linear link, which computes y = xW^T + b, as well as the weights of the EmbedID and LSTM links).

Thus, the code below replaces every initial parameter with values drawn uniformly from the range [-0.1, 0.1].

```python
for param in self.params():
    param.data[...] = np.random.uniform(-0.1, 0.1, param.data.shape)
```
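The [...] on the left-hand side matters: slice assignment with an Ellipsis writes into the existing array, so the array keeps its shape and dtype (float32 here), and NumPy casts the float64 values returned by np.random.uniform into it. A minimal NumPy-only illustration, where the plain array data stands in for a parameter's array (an assumption; no Chainer is involved):

```python
import numpy as np

# Stand-in for a float32 parameter array, e.g. the W of a small Linear link
data = np.zeros((4, 3), dtype=np.float32)
alias = data  # a second reference to the same buffer, as a parameter object would hold

values = np.random.uniform(-0.1, 0.1, data.shape)  # float64 by default
data[...] = values  # overwrite in place; values are cast to float32
```

In plain NumPy terms, rebinding the name (data = values) would instead point it at a new float64 array and leave every other reference, such as alias, unchanged.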

Appendix: Chainer v1 code

Up to Chainer v1, the model was written as follows. Since Chainer v2, the train argument of functions such as F.dropout has been removed, and the global configuration (chainer.config.train) is used instead.

```python
import numpy as np
import chainer
import chainer.functions as F
import chainer.links as L


# Copied from chainer examples code (Chainer v1)
class RNNForLM(chainer.Chain):
    """Definition of a recurrent net for language modeling"""

    def __init__(self, n_vocab, n_units, train=True):
        # Chainer v1 registers child links as keyword arguments;
        # init_scope() only exists from Chainer v2 onward
        super(RNNForLM, self).__init__(
            embed=L.EmbedID(n_vocab, n_units),
            l1=L.LSTM(n_units, n_units),
            l2=L.LSTM(n_units, n_units),
            l3=L.Linear(n_units, n_vocab),
        )
        for param in self.params():
            param.data[...] = np.random.uniform(-0.1, 0.1, param.data.shape)
        self.train = train

    def reset_state(self):
        self.l1.reset_state()
        self.l2.reset_state()

    def __call__(self, x):
        h0 = self.embed(x)
        # v1: the train flag must be passed to every dropout call
        h1 = self.l1(F.dropout(h0, train=self.train))
        h2 = self.l2(F.dropout(h1, train=self.train))
        y = self.l3(F.dropout(h2, train=self.train))
        return y
```
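The difference between the two versions comes down to where dropout gets its training switch. In Chainer v2 and later, evaluation code typically wraps the forward pass in with chainer.using_config('train', False): instead of passing train=False. The NumPy sketch below imitates both styles with hypothetical dropout_v1/dropout_v2 helpers and a hypothetical global config object (none of these are Chainer's code). Like F.dropout, it uses inverted dropout: during training, kept elements are scaled by 1 / (1 - ratio); otherwise the input is returned unchanged.

```python
import contextlib
import types

import numpy as np

# Hypothetical global configuration, standing in for chainer.config
config = types.SimpleNamespace(train=True)


@contextlib.contextmanager
def using_config(name, value):
    """Temporarily override a global config entry (mimics chainer.using_config)."""
    old = getattr(config, name)
    setattr(config, name, value)
    try:
        yield
    finally:
        setattr(config, name, old)


def dropout_v1(x, ratio=0.5, train=True, rng=None):
    """v1 style: the caller passes the train flag explicitly."""
    if not train:
        return x
    rng = np.random.default_rng() if rng is None else rng
    mask = rng.random(x.shape) >= ratio  # keep each element with probability 1 - ratio
    return x * mask / (1.0 - ratio)


def dropout_v2(x, ratio=0.5, rng=None):
    """v2 style: the train flag is read from the global config."""
    return dropout_v1(x, ratio, train=config.train, rng=rng)


x = np.ones((2, 5))
y_train = dropout_v2(x, rng=np.random.default_rng(0))
with using_config('train', False):
    y_eval = dropout_v2(x)
```

Because the flag lives in a global config, the model's __call__ no longer needs a train argument, which is why the v2 RNNForLM has none.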