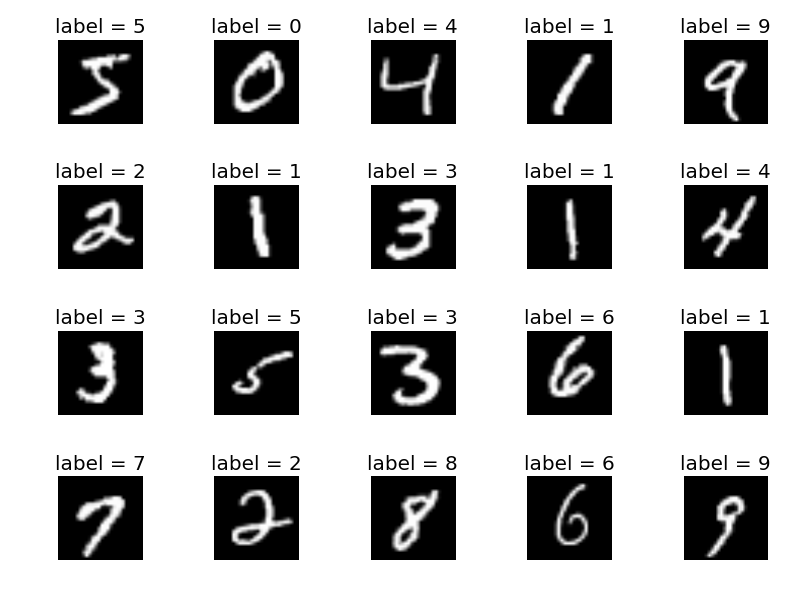

MNIST dataset

MNIST (Modified National Institute of Standards and Technology) is a database of handwritten digits, distributed through Yann LeCun’s website “THE MNIST DATABASE of handwritten digits”.

The dataset consists of pairs of a “handwritten digit image” and a “label”. The digits range from 0 to 9, so there are 10 classes in total.

- handwritten digit image: A grayscale image of 28 x 28 pixels.

- label: The actual digit that the handwritten image represents, an integer from 0 to 9.

The MNIST dataset is widely used for “classification” and “image recognition” tasks. It is considered a relatively simple task and often serves as the “Hello world” program of machine learning. It is also often used to compare the performance of algorithms in research.

Handling MNIST dataset with Chainer

For famous datasets like MNIST, Chainer provides utility functions to prepare the dataset. You don’t need to write your own preprocessing code to download the dataset from the internet, extract it, and format it. The Chainer function does it for you!

Currently, the following datasets are supported:

- MNIST

- CIFAR-10, CIFAR-100

- Penn Tree Bank (PTB)

Refer to the official documentation for datasets for details.

Let’s first get familiar with handling the MNIST dataset. The code below is based on mnist_dataset_introduction.ipynb. To prepare the MNIST dataset, you just need to call the chainer.datasets.get_mnist function.

import chainer

# Load the MNIST dataset with Chainer's built-in helper

train, test = chainer.datasets.get_mnist()

The first time you call it, it downloads the dataset, which might take several minutes. From the second time on, Chainer uses the cached files automatically, so it runs faster.

The function returns two values: the “training dataset” and the “test dataset”.

MNIST has 70000 examples in total: 60000 in the training dataset and 10000 in the test dataset.

# train[i] represents i-th data, there are 60000 training data

# test data structure is same, but total 10000 test data

print('len(train), type ', len(train), type(train))

print('len(test), type ', len(test), type(test))

len(train), type 60000 <class 'chainer.datasets.tuple_dataset.TupleDataset'>

len(test), type 10000 <class 'chainer.datasets.tuple_dataset.TupleDataset'>

I will explain only the train dataset below, but the test dataset has the same format.

train[i] is the i-th example, a tuple (x_i, y_i), where x_i is the image data as an array of size 784 and y_i is the label indicating the actual digit of the image.

print('train[0]', type(train[0]), len(train[0]))

train[0] <class 'tuple'> 2

print(train[0])

(array([ 0., 0., 0., ..., 0., 0., 0.], dtype=float32), 5)
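To make the indexing behaviour above concrete without downloading anything, here is a minimal sketch of a dataset that pairs images with labels. MiniTupleDataset is a hypothetical stand-in written for this illustration, not Chainer’s TupleDataset implementation, and the arrays are placeholders rather than real MNIST data.

```python
import numpy as np


class MiniTupleDataset:
    """Hypothetical stand-in that mimics integer indexing of a tuple dataset.

    This is NOT Chainer's implementation; it only illustrates the
    (x_i, y_i) pairing described above.
    """

    def __init__(self, images, labels):
        if len(images) != len(labels):
            raise ValueError('images and labels must have the same length')
        self._images = images
        self._labels = labels

    def __len__(self):
        return len(self._images)

    def __getitem__(self, i):
        # Return the i-th example as a tuple (x_i, y_i).
        return self._images[i], self._labels[i]


# Three placeholder "images" (flattened 28 x 28 = 784 floats) and made-up labels.
images = np.zeros((3, 784), dtype=np.float32)
labels = np.array([5, 0, 4], dtype=np.int32)
dataset = MiniTupleDataset(images, labels)

x0, y0 = dataset[0]
print(len(dataset), type(dataset[0]), x0.shape, y0)
```

Keeping images and labels in two parallel arrays and pairing them only at lookup time is one simple way to get the train[i] = (x_i, y_i) behaviour shown above.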

x_i information. You can see that the image is represented as just an array of floating-point numbers ranging from 0 to 1. An MNIST image is 28 × 28 pixels, so it is represented as a 1-d array of 784 elements.

# train[i][0] represents x_i, MNIST image data,

# type=numpy(784,) vector <- specified by ndim of get_mnist()

print('train[0][0]', train[0][0].shape)

import numpy as np

np.set_printoptions(threshold=np.inf)  # print the whole array without truncation

print(train[0][0])

train[0][0] (784,) [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.01176471 0.07058824 0.07058824 0.07058824 0.49411768 0.53333336 0.68627453 0.10196079 0.65098041 1. 0.96862751 0.49803925 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.11764707 0.14117648 0.36862746 0.60392159 0.66666669 0.99215692 0.99215692 0.99215692 0.99215692 0.99215692 0.88235301 0.67450982 0.99215692 0.94901967 0.76470596 0.25098041 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.19215688 0.9333334 0.99215692 0.99215692 0.99215692 0.99215692 0.99215692 0.99215692 0.99215692 0.99215692 0.98431379 0.36470589 0.32156864 0.32156864 0.21960786 0.15294118 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.07058824 0.8588236 0.99215692 0.99215692 0.99215692 0.99215692 0.99215692 0.77647066 0.71372551 0.96862751 0.9450981 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.3137255 0.61176473 0.41960788 0.99215692 0.99215692 0.80392164 0.04313726 0. 0.16862746 0.60392159 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.05490196 0.00392157 0.60392159 0.99215692 0.35294119 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.54509807 0.99215692 0.74509805 0.00784314 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.04313726 0.74509805 0.99215692 0.27450982 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.13725491 0.9450981 0.88235301 0.627451 0.42352945 0.00392157 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.31764707 0.94117653 0.99215692 0.99215692 0.4666667 0.09803922 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 
0. 0. 0. 0. 0. 0. 0. 0.17647059 0.72941178 0.99215692 0.99215692 0.58823532 0.10588236 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.0627451 0.36470589 0.98823535 0.99215692 0.73333335 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.97647065 0.99215692 0.97647065 0.25098041 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.18039216 0.50980395 0.71764708 0.99215692 0.99215692 0.81176478 0.00784314 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.15294118 0.58039218 0.89803928 0.99215692 0.99215692 0.99215692 0.98039222 0.71372551 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.09411766 0.44705886 0.86666673 0.99215692 0.99215692 0.99215692 0.99215692 0.78823537 0.30588236 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.09019608 0.25882354 0.83529419 0.99215692 0.99215692 0.99215692 0.99215692 0.77647066 0.31764707 0.00784314 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.07058824 0.67058825 0.8588236 0.99215692 0.99215692 0.99215692 0.99215692 0.76470596 0.3137255 0.03529412 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.21568629 0.67450982 0.88627458 0.99215692 0.99215692 0.99215692 0.99215692 0.95686281 0.52156866 0.04313726 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.53333336 0.99215692 0.99215692 0.99215692 0.83137262 0.52941179 0.51764709 0.0627451 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. ]
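The relation between the flat 784-element vector and the 28 × 28 image can be sketched with plain NumPy. The input here is a random stand-in, not a real MNIST image, and the variable names are chosen only for this illustration. The three layouts correspond to what get_mnist returns for ndim=1, 2 and 3 respectively.

```python
import numpy as np

# Stand-in for one raw image: 28 x 28 unsigned bytes in 0..255 (random, not real MNIST).
rng = np.random.RandomState(0)
raw = rng.randint(0, 256, size=(28, 28)).astype(np.uint8)

# Scale to float32 in [0, 1], like the values printed above.
scaled = raw.astype(np.float32) / 255.0

flat = scaled.reshape(784)      # layout corresponding to ndim=1
square = flat.reshape(28, 28)   # layout corresponding to ndim=2
chw = flat.reshape(1, 28, 28)   # layout corresponding to ndim=3 (channel, height, width)

# Pixel (row r, column c) of the image sits at index r * 28 + c of the flat vector.
r, c = 5, 12
assert flat[r * 28 + c] == square[r, c]

print(flat.shape, square.shape, chw.shape)
```

Because reshape only changes how the same 784 values are indexed, converting between these layouts loses no information.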

y_i information. In the case below, you can see that the 0-th image has label “5”.

# train[i][1] represents y_i, the MNIST label (0-9), a NumPy scalar, so its shape is ()

print('train[0][1]', train[0][1].shape, train[0][1])

train[0][1] () 5
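Since every example carries its label at index 1, it is easy to check how many examples there are per digit. The sketch below uses a tiny hand-made list in the same (image, label) format; count_labels is a hypothetical helper written for this illustration, and with the real data you would pass train or test instead of the toy list.

```python
from collections import Counter


def count_labels(dataset):
    """Count how many examples carry each digit label.

    `dataset` is any indexable collection of (image, label) pairs.
    """
    return Counter(int(dataset[i][1]) for i in range(len(dataset)))


# Tiny hand-made stand-in; the images are placeholders (None) because only labels matter here.
toy = [(None, 5), (None, 0), (None, 4), (None, 5)]
counts = count_labels(toy)
for digit in sorted(counts):
    print(digit, counts[digit])
```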

Plotting MNIST

So, each i-th example consists of an image and a label:

– train[i][0] or test[i][0]: i-th handwritten image

– train[i][1] or test[i][1]: i-th label

Below is plotting code to check what the images (each just an array in the Python program) look like. This code generates the MNIST image shown at the top of this article.

import os

import chainer
import matplotlib
import matplotlib.pyplot as plt

%matplotlib inline

# Load the MNIST dataset with Chainer's built-in helper
train, test = chainer.datasets.get_mnist(ndim=1)

ROW = 4
COLUMN = 5

for i in range(ROW * COLUMN):
    # train[i][0] is the i-th image, a flat vector of 784 values
    image = train[i][0].reshape(28, 28)  # not necessary to reshape if ndim is set to 2
    plt.subplot(ROW, COLUMN, i + 1)      # grid of 4 rows x 5 columns
    plt.imshow(image, cmap='gray')       # cmap='gray' is for black and white picture.
    # train[i][1] is the i-th digit label
    plt.title('label = {}'.format(train[i][1]))
    plt.axis('off')                      # do not show axis value

plt.tight_layout()                       # automatic padding between subplots
os.makedirs('images', exist_ok=True)     # savefig fails if the directory does not exist
plt.savefig('images/mnist_plot.png')
plt.show()
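As an alternative to drawing one subplot per image, the images can be tiled into a single 2-d array and shown with one imshow call. This is only a sketch of that idea using NumPy: tile_images is a hypothetical helper written for this illustration, and the input is random data rather than real MNIST images.

```python
import numpy as np


def tile_images(flat_images, rows, cols, height=28, width=28):
    """Arrange rows * cols flattened images into one (rows*height, cols*width) array."""
    flat_images = np.asarray(flat_images)
    if flat_images.shape != (rows * cols, height * width):
        raise ValueError('expected shape {}'.format((rows * cols, height * width)))
    grid = flat_images.reshape(rows, cols, height, width)
    # Reorder axes to (grid row, pixel row, grid column, pixel column), then merge them.
    return grid.transpose(0, 2, 1, 3).reshape(rows * height, cols * width)


# Random stand-in for the first 4 * 5 = 20 images (not real MNIST data).
fake = np.random.RandomState(0).rand(20, 784).astype(np.float32)
canvas = tile_images(fake, rows=4, cols=5)
print(canvas.shape)  # (112, 140)
```

With the real data, something like np.stack([train[i][0] for i in range(20)]) could supply the input, and plt.imshow(canvas, cmap='gray') would display the tiled result. The per-image titles are lost in this approach, so the subplot version above remains the better choice when labels should be visible.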

[Hands on] Try plotting the “test” dataset instead of the “train” dataset.